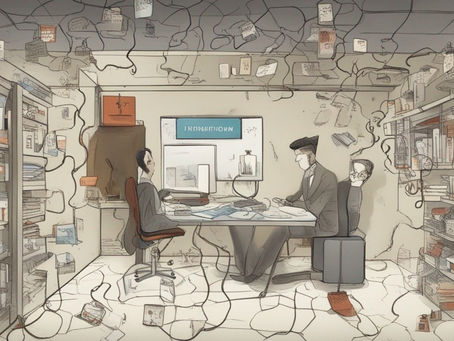

Exploring Information Entropy: From Brain Complexity to Daily Life

Information entropy is a powerful and versatile concept for measuring properties of dynamic systems, including the human brain. In its broader sense, entropy describes the amount of energy unavailable for work (in thermodynamics), the level of disorder in a system, and, in information theory, the uncertainty about the message a signal carries. In this article, we delve into the multifaceted world of information entropy, exploring its applications to understanding the human brain and social systems, as well as its relevance to our daily lives. We
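To make "the uncertainty about the message a signal carries" concrete, here is a minimal sketch of Shannon entropy, H(X) = -Σ p(x) log2 p(x), measured in bits. It assumes the signal is a finite sequence of discrete symbols and that each symbol's probability is estimated from its observed frequency; `shannon_entropy` is a hypothetical helper name used for illustration, not a function from any particular library.

```python
import math
from collections import Counter
from typing import Hashable, Iterable


def shannon_entropy(symbols: Iterable[Hashable]) -> float:
    """Estimate Shannon entropy, in bits, from observed symbol frequencies.

    Assumption: probabilities are estimated as count / total (a simple
    plug-in estimate), which is only an illustration.
    """
    counts = Counter(symbols)
    total = sum(counts.values())
    if total == 0:
        return 0.0  # an empty signal carries no uncertainty
    entropy = 0.0
    for count in counts.values():
        p = count / total  # empirical probability of this symbol
        entropy -= p * math.log2(p)  # add -p * log2(p) to the sum
    return entropy


if __name__ == "__main__":
    print(shannon_entropy("AAAA"))  # → 0.0  (one symbol: fully predictable)
    print(shannon_entropy("ABAB"))  # → 1.0  (two equally likely symbols)
    print(shannon_entropy("ABCD"))  # → 2.0  (four equally likely symbols)
```

A fully predictable signal scores 0 bits, and each doubling of the number of equally likely symbols adds one bit of uncertainty. Continuous signals, such as brain recordings, would need to be discretized or handled with other estimators before a sketch like this applies.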
